Twistago AI, part 3: Hard

This is the third and final part of a series in which I explain how the artificial intelligence works in my latest game, Twistago. In case you missed the first or second part, you can catch up on them here and here.

With the Normal AI able to hold its own against average players, it was time to turn to the Hard AI, called Pro in the game. We were fortunate to know a good chess player who would still easily beat the Normal AI, so if I could make an AI that he would have a good time with, it would be good enough for release. But with the Normal AI sometimes needing all its allotted thinking time to run a well-optimized minimax algorithm, what could still be improved?

The Easy AI had been smooth sailing, and the Normal AI wasn’t too hard to pull of either. But now things got difficult. I tried several radically different approaches, before I finally returned to the basics and managed to squeeze significantly better gameplay out of that.

Failed experiment 1: Neural networks

Remember how minimax works: it ensures that you optimize a certain value function over the course of the game. So far, I had been constructing this function based on some heuristics: distance to the goal, numbers of power cores in the hold, and so on. But there was no way of telling whether the function was actually any good, that is, whether it was actually representative of the AI’s chances of winning the game. So for all I knew, the AI was optimizing very efficiently for the wrong thing.

Some tedious and fruitless manual tinkering later, I realized that I was unable to come up with more suitable improvements to the value function. So why not let the machine do it for me? That’s how the idea of a neural network was born. Instead of manually coding the value function, I could replace it by a neural network. Just like with the hardcoded function, the input consists of the game state, and the output is the “value” that that state has for the current player.

To make this work, I needed a lot of ingredients:

- A way to represent the game state as an array of floating point inputs.

- A suitable size and shape for the network.

- A set of initial weights for the network.

- A way to train the network to get better as it played more.

To represent the game state as an array of floats, I assigned three input neurons to each square of the board. Each of these would either be 1 (for true) or -1 (for false). One represents whether the square contains one of the AI’s own pieces, one whether it contains an opponent’s piece, and one whether it contains a power core. I decided not to distinguish opponents from each other for now – it would be hard enough to get this working for 2 players already. The black hole and mother ships are represented in a similar way by additional neurons, for a total of over 200 inputs.

Then I needed to decide what the rest of the network would look like. In particular: how many layers, of what size, and how are they connected? Tuning these parameters seems to be as much an art as a science. I knew that my manually coded value function was highly nonlinear in places, and that neural networks need at least one hidden layer to be able to represent that. So I opted, a bit arbitrarily, for 100 hidden neurons initially, fully connected to both the input and output layer, for a total of over 20k connections.

Then it was time to initialize the network connection weights. Normally, people use randomly assigned weights, then train it. However, if I did that, all candidate networks would just end up throwing all their pieces into the black hole, and I wouldn’t be able to distinguish their quality. So instead, I bootstrapped the process by letting the network “learn” the manually coded value function. After ironing out some bugs, this process got it within 4% of the real thing. Promising!

And then on to the actual training. A classical way to train a neural network is giving it some input, observing the difference between the actual output and the expected output, and then correcting the weights accordingly. However, the whole point is that I don’t know the expected output. So some other approach was called for. Enter… the genetic algorithm!

This works as follows. We have a pool of 100 “individuals”, where each individual is a neural network with different weights. We let these play a tournament against each other, where each individual plays, say, 20 matches against randomly chosen others. We count how often each individual won, and call this number its fitness. Then comes the genetic bit: we discard the 50 least fit individuals, and let the 50 fittest ones breed with each other. Each “offspring” individual has weights that are randomly selected from either parent, and might receive some random mutations on top of that to ensure genetic diversity.

Sounds complicated? It is. And it didn’t work well at all. After a night of training, the fittest individual was still losing 85% of games from the Easy AI. And by now, the entire system was so complex, with so many parameters to tweak and a 24-hour wait before I could see the results, that I didn’t know where to even start fixing this. So eventually, I abandoned this idea.

Failed experiment 2: Monte Carlo tree search

Another way to avoid having to specify a heuristic value function is the Monte Carlo tree search algorithm, MCTS for short. MCTS was the state of the art for artificial Go players until recently, when it was surpassed by the deep learning approach used by Google in AlphaGo.

Roughly speaking, MCTS replaces the value function by a series of random playthroughs of the game, and observes how often we win. Branches of the game tree that lead to winning are explored more thoroughly, to make sure that opponents don’t have a countermove. There’s an elegant formula, called UCT, you can use for biasing your random selection of branches towards the most promising ones, so you get the most out of your limited thinking time.

I implemented a basic version of this algorithm, but found that it didn’t work very well. The first problem was that a random playthrough of a Twistago game will most likely drop all your aliens into the black hole, so none of the moves can be judged to be better or worse than another. This problem was easily fixed by prohibiting such silly moves.

But then another, more serious issue raised its head. Like all Monte Carlo methods, MCTS needs a lot of data to produce a reliable estimate. Remember that we can go through about 10k game states per second, and that a game of Twistago can easily take 200 moves or more. That means we can evaluate about 500 full playthroughs per second. Even at a reasonable branching factor of about 20, that means we have only 25 data points to judge each move by (biased towards more promising moves, but still).

Besides speed, another problem turned out to be memory. MCTS requires that you keep the evaluated parts of the game tree in memory, so you can annotate them with win and loss counts. A Twistago game state is about 500 bytes, and I estimated I could spend about 5 MB on this, leaving room for only 10k states. This would mean that there wouldn’t be enough memory to let the AI think for more than a second. For this reason, I eventually abandoned the MCTS algorithm.

Game phases and genetic algorithms

With the fancy algorithms leaving me disappointed, I decided to try and get more performance out of the minimax AI that I already had. This was in part inspired by my discovery of the amazing Chess Programming Wiki, a treasure trove of practical information, much of which is not just applicable to chess.

The first observation was that the AI does not adapt to the current “phase” of the game. Chess games are traditionally divided into an opening, middle and endgame, and different approaches are used for each. In Twistago, it makes sense to use the opening for gathering power cores while you still can, the middle for going through the black hole and blocking your opponent, and the endgame to actually land your pieces.

So instead of a single value function, I devised three, with the same structure but different relative weights assigned to things. In the early game, more weight would be given to ownership of power cores, and in the late game more weight would go to bringing the commander home. To decide which of these three game phases we’re in, I used the number of power cores still on the board (which never increases) and the number of aliens landed (which never decreases).

Tweaking the parameters of a single value function was already a tedious job. Tweaking the parameters of three interdependent ones, plus the thresholds to decide which one to use, would have been too much. But I already had some code to solve this problem. Re-enter… the genetic algorithm!

And with only around 25 parameters to tweak, instead of the thousands used in the neural network, the genetic algorithm worked brilliantly. After a night of computation (about half a million games), the newly trained Hard AI had a win rate of over 90% against the Normal AI. Victory at last!

While developing and tuning the genetic algorithm (most notably the mutation rate), I dumped each subsequent generation to a file for future inspection. While waiting for the next few generations to arrive, I wrote a little script to plot the evolution of each parameter on a chart, with the generation number on the horizontal axis and the value of the parameter on the vertical axis. That way, I could see which parameters mattered, and what their optimal value was likely to be.

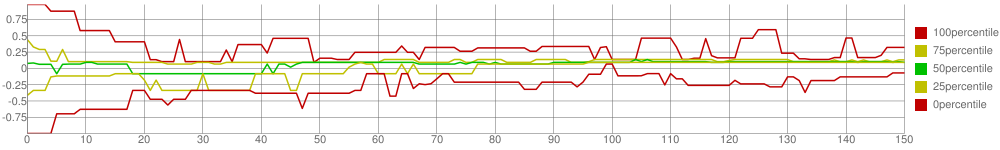

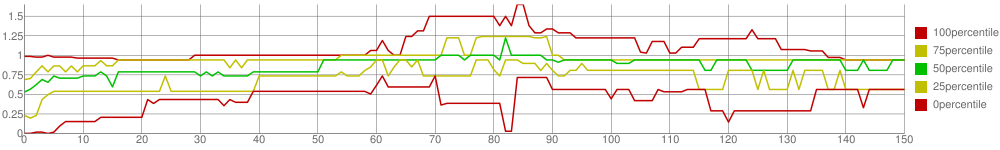

For example, this parameter turns out to have a pretty well-defined optimal value that all percentiles are converging towards:

This one, on the other hand, doesn’t seem to matter a great deal, as evidenced by the broad range of values still in the gene pool near the end:

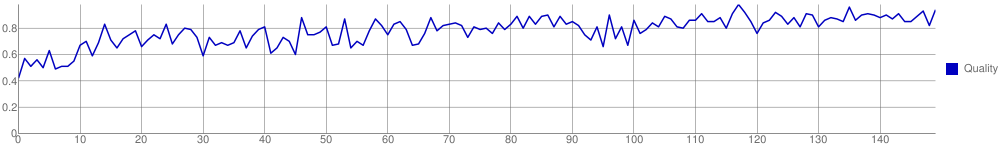

And finally, the overall “quality”, i.e. the win rate against the Normal AI, eventually plateauing:

So this gave me a measurably superior AI. Sadly, reports from human players still showed that it was getting beaten too easily. What else to do?

Iterative deepening

With the minimax algorithm, you have to choose in advance how deep to search the tree. You can’t search some branches more deeply than others, because the results would not be comparable. So I had to cater for the worst case here, a very wide tree with many branches, so that even that monster could still be searched within a few seconds. However, in the typical case, the answer would come much more quickly. Can we use that to our advantage?

The answer is obviously yes, and the algorithm is really simple. Start with a search of depth 1, and store its chosen move. If there is still enough time, start a search of depth 2. And so on. Abort the current search as soon as the timer runs out, and use the result of the deepest search that was completed.

If it’s really that simple, why hadn’t I tried it before? The main reason was that I thought I would need to reuse results from the previous, shallower search to save time in the deeper search, for instance by sorting branches optimally for best alpha-beta pruning performance. This would have been tricky to do within tight memory constraints. However, after further thought, I realized that this is not really needed: wich a branching factor around 10, the next search will take 10 times as long as the previous one, so relatively speaking, we’ve lost only about 10% of our time slot to shallow searches whose results were not eventually used. Not great, but certainly not a deal breaker. To this day, I haven’t had to made any optimizations.

But did it work? Absolutely. Especially in the endgame, where the branching factor is in the single digits, the AI played a much stronger game. Towards the very end, I spotted tree depths of over 20, which would probably allow it to see all the way to the end. Finally the experienced chess player reported that he was struggling to still beat it – although not every single game was lost. That sounds like a proper challenge for the player. Much like developing this AI has been for me!